Apache Hadoop is an open-source, Java-based software framework for distributed storage and parallel processing of big data. It breaks big data analytics jobs into smaller tasks that are processed in parallel using the MapReduce programming model and distributed across a Hadoop cluster. The Hadoop cluster is the heart of the Hadoop framework. This blog introduces the Hadoop cluster, its architecture, the process of designing a cluster, and the advantages of clusters in Hadoop.

Following are the concepts that we are going to cover in this blog:

> What is Hadoop Cluster?

> The basic architecture of Hadoop cluster

> Types of cluster nodes in Hadoop

> Communication protocols used in Hadoop clusters

> Procedure to build an effective cluster in Hadoop

> Hadoop cluster management

> Advantages of Hadoop clusters

Let’s discuss each of the above concepts in detail.

1) What is Hadoop Cluster?

A Hadoop cluster plays an important role in storing and analyzing big data. It consists of a group of computers, known as nodes, that are networked together to perform parallel computations on large data sets. What sets Hadoop clusters apart from other computer clusters is their ability to store and analyze large volumes of structured and unstructured data in a distributed environment. A Hadoop cluster is a network of interconnected master and slave nodes built from low-cost commodity hardware. Because it scales close to linearly and nodes can be added or removed quickly as requirements change, a Hadoop cluster is well suited to big data analytics.
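
To make the idea of parallel computation concrete, here is a minimal sketch of the MapReduce pattern in plain Python. It is not Hadoop code: the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for illustration, and a thread pool stands in for the nodes of a cluster.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def map_phase(split):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(mapped_outputs):
    """Shuffle: group all emitted values by key across the map outputs."""
    grouped = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: sum the values collected for each key."""
    return {key: sum(values) for key, values in grouped.items()}


def word_count(splits, workers=3):
    # Each split is mapped independently of the others, which is what lets
    # a real cluster hand different splits to different nodes.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        mapped = list(pool.map(map_phase, splits))
    return reduce_phase(shuffle(mapped))


if __name__ == "__main__":
    splits = ["big data on hadoop", "hadoop stores big data", "data nodes"]
    print(word_count(splits))
```

The point of the sketch is that the map step needs no coordination between splits, so adding workers (or, in Hadoop, nodes) lets more splits be processed at the same time.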

2) Basic Architecture of Hadoop Cluster

The Hadoop cluster architecture is built on two types of nodes: master nodes and slave nodes. Each type runs its own daemons, and together they perform various jobs on the Hadoop Distributed File System (HDFS). Let’s discuss them one by one.

Master in the Hadoop Cluster:

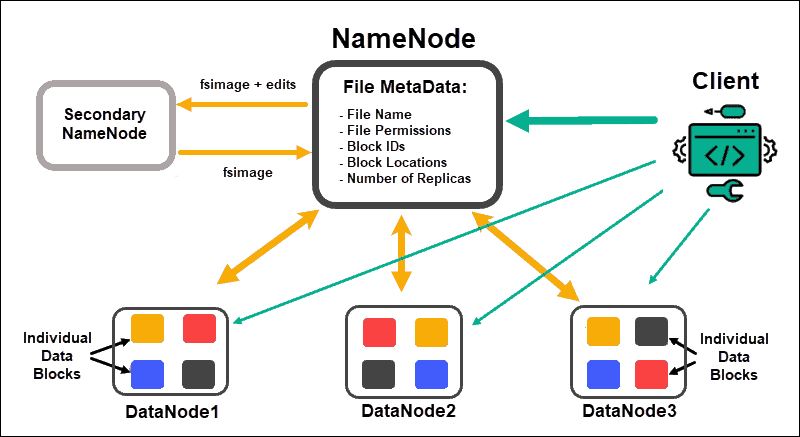

The Hadoop master is a machine with high-quality hardware that runs two daemons: the NameNode and the ResourceManager.

a) NameNode and its functions:

The NameNode runs on the master node and is the centrepiece of HDFS. It belongs to the storage layer of Hadoop.

Functions of NameNode:

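a. Stores the file system metadata for the cluster, such as the directory tree and which slave nodes hold each data block.

b. Uses heartbeats to keep track of all the slave nodes and to check whether each one is alive or dead.

c. Supports high reliability, availability, and fault tolerance by knowing where every block replica is stored.

d. Directs the replication of data blocks from one slave node to other slave nodes.

e. Keeps data replication balanced, for example by scheduling new copies when a block has too few replicas.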

f. Regulates clients' access to the files.
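
The heartbeat check in item b can be sketched as follows. This is a simplified model, not NameNode source code: the `HeartbeatMonitor` class is hypothetical, and the constants assume the commonly documented HDFS defaults of a 3-second heartbeat interval and a 5-minute recheck interval, which together give a timeout of about 10.5 minutes before a node is considered dead.

```python
HEARTBEAT_INTERVAL_S = 3    # assumed default for dfs.heartbeat.interval
RECHECK_INTERVAL_S = 300    # assumed default recheck interval (5 minutes)
# A node is treated as dead after 2 * recheck + 10 * heartbeat seconds.
DEAD_TIMEOUT_S = 2 * RECHECK_INTERVAL_S + 10 * HEARTBEAT_INTERVAL_S


class HeartbeatMonitor:
    """Tracks the last heartbeat time reported by each slave node."""

    def __init__(self, timeout_s=DEAD_TIMEOUT_S):
        self.timeout_s = timeout_s
        self.last_seen = {}  # node id -> time of last heartbeat, in seconds

    def record_heartbeat(self, node_id, now_s):
        self.last_seen[node_id] = now_s

    def live_nodes(self, now_s):
        return sorted(n for n, t in self.last_seen.items()
                      if now_s - t <= self.timeout_s)

    def dead_nodes(self, now_s):
        return sorted(n for n, t in self.last_seen.items()
                      if now_s - t > self.timeout_s)


if __name__ == "__main__":
    monitor = HeartbeatMonitor()
    monitor.record_heartbeat("dn1", now_s=0)
    monitor.record_heartbeat("dn2", now_s=0)
    monitor.record_heartbeat("dn1", now_s=600)  # dn2 has gone silent
    print(monitor.live_nodes(now_s=700), monitor.dead_nodes(now_s=700))
```

Time is passed in explicitly rather than read from the clock so the behaviour is easy to follow and to test.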

b) ResourceManager and its functions:

The ResourceManager schedules applications and tracks the resources available in a cluster.

Functions of the ResourceManager:

> Allocates the cluster's resources among the competing applications.

> Tracks the availability of nodes.
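
As a rough illustration of resource allocation, the sketch below grants memory requests in arrival order on the first node with enough free memory. It is deliberately simplistic and is an assumption made for this example: real YARN schedulers use queues, priorities, and fairness policies. The function name, node names, and application ids are all hypothetical.

```python
def allocate_containers(node_free_mb, requests):
    """Grant container requests in FIFO order, first-fit by node name.

    node_free_mb: dict of node name -> free memory in MB
    requests: list of (app_id, memory_mb) tuples, in arrival order
    Returns (granted, pending): granted is a list of
    (app_id, node, memory_mb); pending holds requests that did not fit.
    """
    free = dict(node_free_mb)  # copy so the caller's dict is not modified
    granted, pending = [], []
    for app_id, memory_mb in requests:
        # Pick the first node (alphabetically) with enough free memory.
        node = next((n for n in sorted(free) if free[n] >= memory_mb), None)
        if node is None:
            pending.append((app_id, memory_mb))
            continue
        free[node] -= memory_mb
        granted.append((app_id, node, memory_mb))
    return granted, pending


if __name__ == "__main__":
    nodes = {"node1": 4096, "node2": 2048}
    requests = [("app1", 3072), ("app2", 2048), ("app1", 2048), ("app3", 1024)]
    granted, pending = allocate_containers(nodes, requests)
    print("granted:", granted)
    print("pending:", pending)
```

Requests that do not fit stay pending until resources free up, which is why tracking node availability matters as much as allocation itself.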

Slaves in the Hadoop Cluster:

In Hadoop, slave nodes are where the actual data is stored and where data processing takes place. Two daemons run on each slave node to store and process data: the DataNode and the NodeManager. Let's discuss the functions of each daemon.

a) Functions of DataNode:

> The actual data is stored on DataNodes

> It performs low-level operations such as serving read and write requests for the data blocks it holds.

> Based on the instructions provided by the master, it creates, replicates, and deletes data blocks.

> DataNodes communicate with one another, for example to copy blocks, which helps keep replication balanced and at the required level across the system.
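
The following sketch shows how a file might be split into blocks and how each block's replicas could be spread over DataNodes. It assumes a 128 MB block size and a replication factor of 3, which are common HDFS defaults, and it uses simple round-robin placement. Real HDFS placement is rack-aware, so treat the `place_blocks` logic as an illustration only.

```python
import math

BLOCK_SIZE_MB = 128   # assumed block size (common HDFS default)
REPLICATION = 3       # assumed replication factor (common HDFS default)


def place_blocks(file_size_mb, datanodes,
                 block_size_mb=BLOCK_SIZE_MB, replication=REPLICATION):
    """Return a dict of block index -> list of DataNodes holding a replica."""
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds the number of DataNodes")
    num_blocks = math.ceil(file_size_mb / block_size_mb)
    placement = {}
    for block in range(num_blocks):
        # Shift the starting node per block so replicas spread evenly;
        # the replicas of one block always land on different DataNodes.
        placement[block] = [datanodes[(block + r) % len(datanodes)]
                            for r in range(replication)]
    return placement


def blocks_surviving(placement, failed_node):
    """Blocks that still have at least one replica after one node fails."""
    return [block for block, nodes in placement.items()
            if any(node != failed_node for node in nodes)]


if __name__ == "__main__":
    placement = place_blocks(300, ["dn1", "dn2", "dn3", "dn4"])
    print(placement)
    print(blocks_surviving(placement, failed_node="dn3"))
```

Because every block lives on three different nodes, losing any single DataNode in this example leaves all blocks readable.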

b) Functions of NodeManager:

> The NodeManager launches and manages containers on a node

> The NodeManager runs services on its node to check the node's health and sends status reports to the ResourceManager.

Hadoop can also be installed and configured on client nodes.

c) Functions of the client node:

> Loads the data into the Hadoop cluster.

> Submits the MapReduce job to guide data processing.

> Gathers the output from a targeted location.

3) How many types of cluster nodes do we have in Hadoop?

In Hadoop, a cluster can be deployed in two ways:

a. Single Node Cluster

b. MultiNode Cluster

The key difference between the two is that a single-node cluster is deployed on one machine, whereas a multi-node cluster is deployed across multiple machines.

Single-Node Hadoop Cluster: In this cluster, all the daemons, such as the NameNode and DataNode, run on the same machine. In its simplest form, the default standalone (local) mode, Hadoop runs as a single JVM process and needs no configuration beyond setting the JAVA_HOME variable. In pseudo-distributed mode, each daemon runs in its own JVM on that one machine.

Multi-node Hadoop clusters: These have a master-slave architecture in which the daemons run on separate machines or hosts. The NameNode runs on the master machine and the DataNodes run on the slave machines. The master daemons, such as the ResourceManager and NameNode, run on powerful servers, whereas the slave daemons, such as the DataNode and NodeManager, run on inexpensive servers. The slave machines can be placed in any location, regardless of the physical location of the master server.

4) Communication Protocols used in Hadoop Clusters

All the Hadoop Distributed File System (HDFS) communication protocols are layered on top of TCP/IP. A client establishes a connection to a configurable TCP port on the NameNode and talks to it using the Client protocol. The DataNodes talk to the NameNode using the DataNode protocol. A Remote Procedure Call (RPC) abstraction wraps both the Client protocol and the DataNode protocol. By design, the NameNode never initiates an RPC; it only responds to RPC requests issued by DataNodes or clients.
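
The "NameNode only responds" rule can be illustrated with a small model in which the master queues instructions and hands them over in its reply to a DataNode's heartbeat call. The classes, method names, and command string below are hypothetical and only mimic the direction of the calls. They are not the real Hadoop RPC interfaces.

```python
class NameNode:
    """Only answers calls; it never opens a call to a DataNode itself."""

    def __init__(self):
        self.pending_commands = {}  # node id -> commands queued for it
        self.known_nodes = set()

    def queue_command(self, node_id, command):
        # Work for a DataNode waits here until that node calls in.
        self.pending_commands.setdefault(node_id, []).append(command)

    def handle_heartbeat(self, node_id):
        # Reply to a DataNode-initiated call with any queued commands.
        self.known_nodes.add(node_id)
        return self.pending_commands.pop(node_id, [])


class DataNode:
    def __init__(self, node_id, namenode):
        self.node_id = node_id
        self.namenode = namenode
        self.executed = []

    def send_heartbeat(self):
        # The DataNode initiates the call; commands arrive in the reply.
        for command in self.namenode.handle_heartbeat(self.node_id):
            self.executed.append(command)


if __name__ == "__main__":
    nn = NameNode()
    dn = DataNode("dn1", nn)
    nn.queue_command("dn1", "replicate block 7")
    print(dn.executed)   # nothing yet: the NameNode does not push
    dn.send_heartbeat()
    print(dn.executed)   # the command arrived in the heartbeat reply
```

Keeping the master purely reactive means it never has to manage outgoing connections to every slave, which simplifies its role as the number of nodes grows.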

5) Procedure to build an effective cluster in Hadoop

Building a Hadoop cluster is a critical job because the performance of the entire system depends on the design of the cluster. A poorly designed cluster can degrade performance and lead to system failures. Here are the main points to consider when setting up a Hadoop cluster.

One should consider the following points while choosing the appropriate hardware:

> The volume of data that the cluster is going to handle.

> The types of workloads that will run on the cluster.

> The data storage methods used, if any, such as data compression and data container formats.

> The data retention policy.

Sizing the Hadoop Cluster:

Before choosing the size of a Hadoop cluster, we should take two factors into consideration: the data already in hand and the amount of data generated on a daily basis. A clear idea of these two factors helps determine the number of machines required and their configuration. The main point is to strike a balance between the performance and the cost of the hardware chosen.
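
A back-of-the-envelope version of this sizing calculation might look like the sketch below. Every number in it is an illustrative assumption rather than a recommendation: a replication factor of 3, 48 TB of disk per node, and 75% of each disk usable for HDFS data after leaving room for temporary files and the operating system.

```python
import math


def estimate_datanodes(initial_tb, daily_growth_tb, retention_days,
                       replication=3, usable_fraction=0.75,
                       disk_per_node_tb=48):
    """Estimate how many storage nodes are needed for a retention period."""
    # Data in hand plus everything generated during the retention period.
    logical_tb = initial_tb + daily_growth_tb * retention_days
    # Every block is stored `replication` times.
    raw_tb = logical_tb * replication
    # Only part of each node's disk is available for HDFS data.
    usable_per_node_tb = disk_per_node_tb * usable_fraction
    return math.ceil(raw_tb / usable_per_node_tb)


if __name__ == "__main__":
    # 100 TB today, growing by 0.5 TB per day, kept for one year.
    print(estimate_datanodes(initial_tb=100, daily_growth_tb=0.5,
                             retention_days=365))
```

With these inputs the logical data is 282.5 TB, the replicated footprint is 847.5 TB, and each node contributes 36 TB, so the estimate comes to 24 nodes. Compression, if used, would reduce the logical volume before replication is applied.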

Configuring Hadoop Cluster:

Finding the ideal configuration for a Hadoop cluster is a challenging task. To decide on a configuration, first run a typical Hadoop job with the default configuration. This gives us a baseline. The job produces job history log files, which we can analyze to check whether any resource is a bottleneck or whether the job took longer than expected. Repeating this process after each configuration change helps us arrive at a Hadoop cluster design that meets the business requirements.
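
The comparison against the baseline can be as simple as the sketch below. It assumes the per-phase durations have already been extracted from the job history logs into dictionaries. The phase names, the numbers, and the 10% tolerance are hypothetical.

```python
def compare_to_baseline(baseline_s, candidate_s, tolerance=0.10):
    """Return phases where the candidate run is slower than the baseline.

    baseline_s, candidate_s: dicts of phase name -> duration in seconds
    tolerance: relative slowdown that is still considered acceptable
    """
    regressions = {}
    for phase, base in baseline_s.items():
        cand = candidate_s.get(phase)
        if cand is None:
            continue  # phase missing from the candidate run, nothing to compare
        change = (cand - base) / base
        if change > tolerance:
            regressions[phase] = round(change, 2)
    return regressions


if __name__ == "__main__":
    baseline = {"map": 100, "shuffle": 50, "reduce": 80}
    candidate = {"map": 90, "shuffle": 70, "reduce": 84}
    print(compare_to_baseline(baseline, candidate))
```

In this made-up example only the shuffle phase is flagged, since it is 40% slower than the baseline, which would suggest looking at network or disk settings before anything else.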

6) Hadoop Cluster Management

When you deploy a Hadoop cluster in production, it should be able to scale along multiple dimensions, including volume, velocity, and variety. A production cluster also needs 24/7 availability, manageability, robustness, and good performance. Hadoop cluster management is therefore an essential part of any big data initiative.

An ideal cluster management setup should offer the following features:

> It should support diverse workload management, resource provisioning, security, health monitoring, and performance optimization. It should also support tasks such as job scheduling, policy management, and backup and recovery across single or multiple nodes.

> It should deploy redundant HDFS NameNodes with hot standbys, load balancing, auto-failover, and resynchronization.

> It should implement policy-based controls that prevent applications from consuming more resources than they require.

> It should perform regression testing on all layers of software running on the Hadoop cluster, so that jobs do not crash and day-to-day operations run without obstacles.

7) Advantages of Hadoop cluster

> Hadoop clusters speed up big data analytics jobs because they break computational tasks into smaller tasks that run in a distributed, parallel fashion.

> Hadoop clusters are highly flexible and scalable. Nodes can be added to improve throughput and maintain the required processing speed.

> Hadoop clusters use readily available, low-cost commodity hardware, which makes them cost-effective and easy to set up and manage.

> The replication feature of a Hadoop cluster copies data across the distributed file system, which greatly reduces the risk of data loss when a node fails.

> Hadoop clusters easily integrate and leverage data from different data formats and sources.

Conclusion: The Hadoop cluster plays an important role in storing and analyzing big data. Clusters speed up big data analytics jobs by breaking computational tasks into smaller tasks that run in parallel. Several tools are available for managing Hadoop clusters effectively, such as Apache Ambari and Cloudera Manager. We hope this blog has given you useful information about Hadoop clusters.
