Before diving into this Apache Oozie blog, let us understand where scheduler systems are used in Hadoop. Hadoop jobs often depend on one another; for example, the output of a MapReduce task may become the input of a Hive job for further processing. We may also need to schedule a group of tasks by time (daily or weekly, say) or by the availability of data. Apache Oozie lets you manage all of these kinds of job scheduling in Hadoop, which has made it an important part of the Hadoop ecosystem. In this blog we cover the following topics:

> What is Oozie?

> Why use Apache Oozie?

> Oozie Workflow diagram

> Packaging and deploying an Oozie workflow application

> Apache Oozie Features

Let’s discuss each topic in detail.

> What is Apache Oozie?

Apache Oozie is a Java web application specialized in scheduling Hadoop jobs. It combines multiple jobs sequentially into a single logical unit of work. It is integrated with the Hadoop stack and supports Hadoop jobs for MapReduce, Apache Pig, Apache Hive, and Apache Sqoop. Oozie can also schedule system-specific jobs, such as shell scripts or Java programs. It handles multiple Hadoop operations and simplifies the cluster administrator's task of building complex data transformations out of several component tasks. It gives you greater control over jobs and makes it easier to repeat them at specified intervals.

Check out our Big Data Hadoop & Spark training by TrainingHub.io

Oozie consists of the following two components:

a. Workflow Engine: The workflow engine stores and runs workflows composed of Hadoop jobs, e.g., Pig, MapReduce, and Hive.

b. Coordinator Engine: The coordinator engine runs workflow jobs based on predefined schedules and the availability of data.
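To make the coordinator engine a little more concrete, here is a minimal sketch of a coordinator definition that triggers a workflow once a day. The application name, the dates, and the `workflowAppUri` property are hypothetical placeholders, and the schema version is an assumption you should check against your Oozie release. The snippet only writes the file to a local scratch directory; it does not contact an Oozie server.

```shell
#!/bin/sh
# Write a minimal coordinator definition to a local scratch directory.
# All names and dates below are illustrative placeholders.
mkdir -p coord-demo

# The quoted 'EOF' stops the shell from expanding the ${...} expressions,
# which are meant for Oozie, not for the shell.
cat > coord-demo/coordinator.xml <<'EOF'
<coordinator-app name="daily-demo-coord"
                 frequency="${coord:days(1)}"
                 start="2025-01-01T00:00Z" end="2025-12-31T00:00Z"
                 timezone="UTC"
                 xmlns="uri:oozie:coordinator:0.4">
  <action>
    <workflow>
      <!-- HDFS directory that contains workflow.xml (hypothetical property) -->
      <app-path>${workflowAppUri}</app-path>
    </workflow>
  </action>
</coordinator-app>
EOF

echo "wrote coord-demo/coordinator.xml"
```

This sketch covers only the time-based case. Triggering on data availability additionally uses dataset and input-event declarations, which are left out here to keep the example short.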

> Why do we use Apache Oozie?

The core idea behind Apache Oozie is to manage the different jobs processed by the Hadoop system. As we know, Hadoop jobs depend on one another, and users specify these dependencies in the form of Directed Acyclic Graphs (DAGs). Oozie consumes the DAG information and executes the jobs in the correct order, which saves the time otherwise spent managing workflows by hand. You can also use Oozie to specify how frequently a particular job should run.

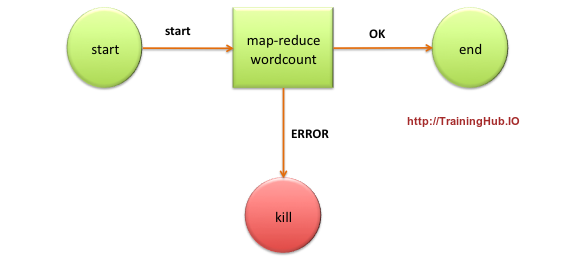

> Oozie Workflow diagram

An Oozie workflow is a collection of actions arranged in a Directed Acyclic Graph (DAG). Control nodes determine the order in which the actions run and define the rules for starting and ending a workflow. The diagram illustrates how a workflow proceeds in Oozie.
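As a rough illustration of how control nodes and action nodes fit together, the sketch below writes a small workflow definition with one MapReduce action. The workflow name, the action name, and the `inputDir` and `outputDir` parameters are hypothetical, the schema version is an assumption, and a real MapReduce action would also need its mapper and reducer classes configured.

```shell
#!/bin/sh
# Write a minimal workflow definition to a local scratch directory.
mkdir -p wf-demo

# Quoted 'EOF': leave the ${...} parameters for Oozie to resolve.
cat > wf-demo/workflow.xml <<'EOF'
<workflow-app xmlns="uri:oozie:workflow:0.5" name="demo-wf">
  <!-- Control node: where the workflow begins -->
  <start to="mr-step"/>

  <!-- Action node: a single MapReduce job -->
  <action name="mr-step">
    <map-reduce>
      <job-tracker>${jobTracker}</job-tracker>
      <name-node>${nameNode}</name-node>
      <configuration>
        <property>
          <name>mapred.input.dir</name>
          <value>${inputDir}</value>
        </property>
        <property>
          <name>mapred.output.dir</name>
          <value>${outputDir}</value>
        </property>
      </configuration>
    </map-reduce>
    <!-- Transitions: the edges of the DAG -->
    <ok to="end"/>
    <error to="fail"/>
  </action>

  <!-- Control nodes: failure exit and success exit -->
  <kill name="fail">
    <message>MapReduce step failed: ${wf:errorMessage(wf:lastErrorNode())}</message>
  </kill>
  <end name="end"/>
</workflow-app>
EOF

echo "wrote wf-demo/workflow.xml"
```

The `start`, `kill`, and `end` elements are the control nodes; the `ok` and `error` transitions decide which node runs next, which is what makes the definition a graph rather than a flat list.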

> Packaging and deploying an Oozie workflow application

A workflow application consists of a workflow definition plus its associated resources, such as Pig scripts and MapReduce JAR files. Every application must follow a simple directory structure and be deployed to the Hadoop Distributed File System (HDFS) so that Oozie can access it.

Consider the following example directory structure:

    <name of workflow>/
    ├── lib/
    │   └── hadoop-examples.jar
    └── workflow.xml

The lib directory in the layout above holds the JAR files containing the MapReduce classes. The workflow.xml file must sit at the top level of the directory named after the workflow. We can use a build tool such as Ant or Maven to produce a workflow application with this layout.
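Before uploading, it can help to check that a directory really follows this layout. The helper below is a small sketch written for this post, not part of Oozie: `check_layout` is a hypothetical function name, `demo-workflow` is a throwaway directory, and the JAR and workflow.xml it creates are empty placeholders standing in for real build output.

```shell
#!/bin/sh
# check_layout DIR: succeed if DIR looks like a workflow application directory.
check_layout() {
    app_dir=$1
    # workflow.xml must be at the top level of the application directory
    if [ ! -f "$app_dir/workflow.xml" ]; then
        echo "missing $app_dir/workflow.xml" >&2
        return 1
    fi
    # lib/ only matters when the workflow ships its own JAR files
    if [ -d "$app_dir/lib" ]; then
        ls "$app_dir"/lib/*.jar >/dev/null 2>&1 ||
            echo "note: $app_dir/lib contains no JAR files" >&2
    fi
    return 0
}

# Build a throwaway example layout using empty placeholder files.
mkdir -p demo-workflow/lib
: > demo-workflow/lib/hadoop-examples.jar
: > demo-workflow/workflow.xml

check_layout demo-workflow && echo "layout ok"
```

In a real project the JAR would come from the Ant or Maven build rather than being created by hand.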

Following is the example command used to copy the Workflow application to HDFS:

% hadoop fs -put hadoop-examples/target/<name of workflow dir> <name of workflow>

To run a workflow job, we use the Oozie command-line tool, a client program that communicates with the Oozie server. The steps are as follows:

1) Export the OOZIE_URL environment variable, which tells the oozie command which server to use (here, a local server).

% export OOZIE_URL="http://localhost:11000/oozie"

2) Run the workflow job using the following command:

% oozie job -config ch05/src/main/resources/max-temp-workflow.properties -run

The -config option names a local Java properties file that defines the parameters used in the workflow XML file. The same file also sets oozie.wf.application.path, which tells Oozie where the workflow application is located in HDFS.

A properties file contains the following content:

    nameNode=hdfs://localhost:8020
    jobTracker=localhost:8021
    oozie.wf.application.path=${nameNode}/user/${user.name}/<name of workflow>

3) Check the status of the workflow job: use the ‘job’ subcommand with the ‘-info’ option, followed by the job id.

% oozie job -info <job id>

The output shows the status of the workflow job, such as RUNNING, KILLED, or SUCCEEDED.

4) We can view the result of successful workflow jobs by using the following Hadoop command:

% hadoop fs -cat <location of result>
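The status check in step 3 can be wrapped in a small polling loop so that you do not have to rerun the command by hand. This is only a sketch: `extract_status` and `wait_for_job` are hypothetical helper names, and the parsing assumes the `-info` output contains a line of the form `Status : <STATE>`, which you should confirm against your Oozie version.

```shell
#!/bin/sh
# extract_status: read `oozie job -info` output on stdin and print the
# value of the "Status" line (assumed format: "Status        :  RUNNING").
extract_status() {
    awk -F':' '/^Status/ { gsub(/[ \t]/, "", $2); print $2; exit }'
}

# wait_for_job JOB_ID [INTERVAL_SECONDS]
# Poll until the job leaves the PREP/RUNNING states, then print the final
# status. Returns 1 if no status could be read at all.
wait_for_job() {
    job_id=$1
    interval=${2:-10}
    while :; do
        status=$(oozie job -info "$job_id" | extract_status)
        case $status in
            "")           echo "could not read job status" >&2; return 1 ;;
            PREP|RUNNING) sleep "$interval" ;;
            *)            echo "$status"; return 0 ;;
        esac
    done
}

# Demonstrate the parser on a hand-written sample instead of a live server.
printf 'Workflow Name :  demo-wf\nStatus        :  SUCCEEDED\n' | extract_status
```

With OOZIE_URL exported as in step 1, you would call `wait_for_job <job id>` and read the result once it prints a final state.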

> Apache Oozie Features

a. Oozie provides a command-line interface as well as a client API, which help in launching, monitoring, and controlling jobs from Java applications.

b. Its Web Services APIs let us control jobs from anywhere.

c. When a job completes, Oozie can send an email notification.

d. Oozie can also run jobs that are scheduled to execute periodically.

Conclusion: That wraps up this Oozie tutorial. So far we have learned what Oozie is, why it is used, how workflows work in Oozie, and what features Oozie offers. We hope this blog has given you some useful information.

